We have all witnessed recent incidents that underscore the inherent fragility of IT systems.

First, I understand why organizations place their trust in security agents that are deeply integrated into the OS to bolster system security. These agents come from reputable vendors and undergo rigorous testing to minimize potential issues, especially in high-impact scenarios.

However, despite our best efforts and the most stringent procedural tests, issues can still arise. After all, we are human, and errors are an inevitable part of our reality. I sympathize deeply with those affected by such incidents, recognizing the immense pressure and responsibility they bear.

Incidents like this occur daily in various organizations, usually on a smaller scale and often because of auto-updates.

We must take a step back and consider how we can mitigate these issues in the future.

While it might be tempting to lay all the blame on CrowdStrike, it’s essential to remember that both companies and end-users share the responsibility for minimizing the impact of such incidents.

Here are several key points to consider.

🗃️ The organizational perspective

First and foremost, establish robust testing stages. Implement processes to thoroughly test updates in automated environments before deploying them to production. Comprehensive backup strategies matter just as much: keep critical data and systems backed up across multiple versions and locations.

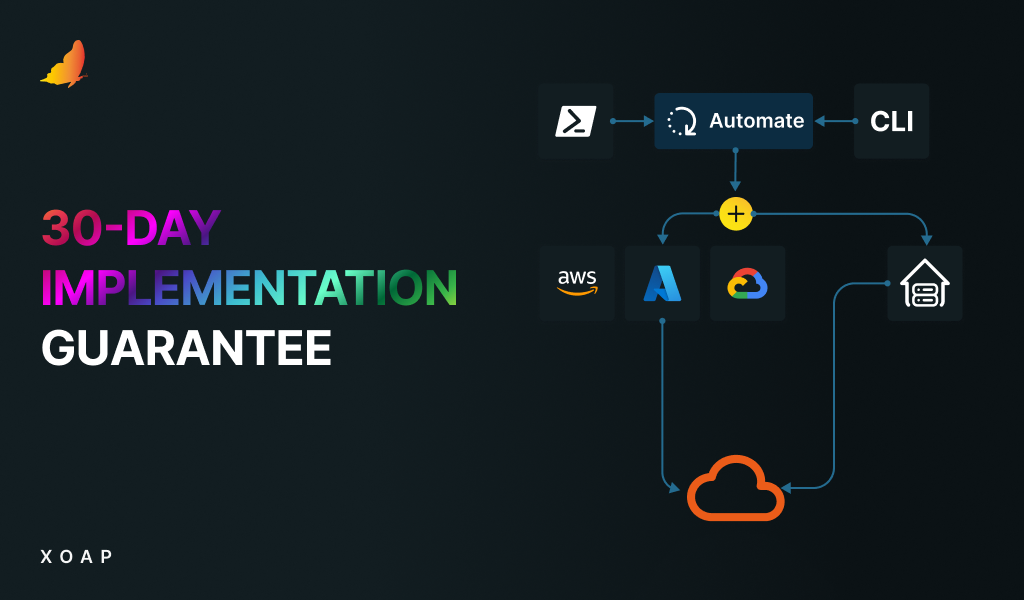

In the event of total failure, you will need a clear, automated plan for redeploying your entire infrastructure. Develop redeployment plans and avoid the pitfall of treating server setup as a one-time task—automation is crucial. Moreover, pay attention to desktop readiness. Leverage available solutions to ensure desktops can be restored and operational within hours.

If you haven’t yet, adopt contemporary deployment methodologies and agile practices. Following incidents, conduct thorough postmortem analyses to identify and implement improvements.

💻 The technical perspective

Avoid “latest” and auto-updates on critical systems. While convenient, these can introduce unforeseen issues, so exercise caution.
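As a rough illustration, a pipeline could refuse to deploy anything that still floats on "latest". The sketch below is in Python and assumes your deployments reference container images by name. The function names `is_pinned` and `unpinned_images` and the registry host are made up for this example.

```python
def is_pinned(image_ref: str) -> bool:
    """Return True if the reference uses a digest or an explicit tag other than 'latest'."""
    # A digest (name@sha256:...) is the strictest form of pinning.
    if "@" in image_ref:
        return True
    # Only the last path segment can carry a tag; an earlier colon may be a registry port.
    last_segment = image_ref.rsplit("/", 1)[-1]
    if ":" not in last_segment:
        return False  # no tag at all means the runtime falls back to "latest"
    tag = last_segment.split(":", 1)[1]
    return tag not in ("", "latest")


def unpinned_images(image_refs):
    """Return the references that would float to whatever version is newest."""
    return [ref for ref in image_refs if not is_pinned(ref)]


if __name__ == "__main__":
    refs = [
        "nginx",
        "nginx:latest",
        "nginx:1.25.3",
        "registry.example.com:5000/app",      # hypothetical registry, no tag
        "registry.example.com:5000/app:2.1",  # hypothetical registry, pinned tag
    ]
    for ref in unpinned_images(refs):
        print(f"not pinned: {ref}")
```

A check like this only covers container images. The same idea applies to package versions and agent update channels, but the syntax will differ.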

Testing should be done in controlled environments. Deploy nothing to production without thorough testing in a staging environment. Testing should also be done regularly. Conduct regular, comprehensive tests of your automation and deployment processes—not just annually.
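One way to picture "nothing reaches production untested" is a simple promotion gate: an update moves to the next stage only if the previous one passed its checks. This is a minimal Python sketch, not a real deployment tool. The stage names and the `deploy_and_check` callback are assumptions standing in for whatever your pipeline actually does.

```python
from typing import Callable, List, Sequence


def promote_through_stages(
    stages: Sequence[str],
    deploy_and_check: Callable[[str], bool],
) -> List[str]:
    """Deploy stage by stage and stop at the first stage whose checks fail.

    Returns the stages that were deployed and verified successfully.
    """
    completed: List[str] = []
    for stage in stages:
        if not deploy_and_check(stage):
            break  # later stages, including production, are never touched
        completed.append(stage)
    return completed


if __name__ == "__main__":
    # Hypothetical check results: the update breaks in staging.
    results = {"test": True, "staging": False, "production": True}
    done = promote_through_stages(["test", "staging", "production"], results.__getitem__)
    print("verified stages:", done)
```

Running the same gate on a schedule, not only when something ships, also exercises the automation itself.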

To ensure rapid deployment in disaster scenarios, automate your infrastructure as much as possible and consider implementing automated testing. Testing processes enhanced with automation will catch issues early. For quicker recovery and reduced attack surfaces, transition critical infrastructure to containers and Kubernetes. Simultaneous failures can be mitigated by distributing infrastructure and critical components across multiple cloud providers and geographical locations.

When it comes to standby snapshots, go cold. Maintain replicated, versioned snapshots of crucial backend systems and data as cold standby, since hot standby can replicate corrupted data.
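Versioning is what makes a cold standby useful: if corruption started at a known time, you can fall back to the newest snapshot taken before it. Here is a small Python sketch of that selection step. The `Snapshot` type and `latest_clean_snapshot` function are hypothetical, and it assumes you can estimate when the corruption began.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Iterable, Optional


@dataclass(frozen=True)
class Snapshot:
    snapshot_id: str
    taken_at: datetime


def latest_clean_snapshot(
    snapshots: Iterable[Snapshot],
    corrupted_since: datetime,
) -> Optional[Snapshot]:
    """Return the newest snapshot taken strictly before the corruption began, if any."""
    candidates = [s for s in snapshots if s.taken_at < corrupted_since]
    return max(candidates, key=lambda s: s.taken_at, default=None)


if __name__ == "__main__":
    history = [
        Snapshot("snap-001", datetime(2024, 1, 1, 2, 0)),
        Snapshot("snap-002", datetime(2024, 1, 2, 2, 0)),
        Snapshot("snap-003", datetime(2024, 1, 3, 2, 0)),  # taken after the bad update
    ]
    restore_point = latest_clean_snapshot(history, corrupted_since=datetime(2024, 1, 2, 12, 0))
    print(restore_point)
```

With a hot standby there is only one copy to choose from, and it may already contain the damage.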

Last but not least, rethink your security measures. Explore innovative security solutions that reduce dependency on full-blown desktops.

What do you think?

Before wrapping up this post, I also want to highlight a particularly smart approach to addressing these issues, developed by Helge Klein and the uberAgent team: the uberAgent Driver Safety Net feature. Check it out!

If there’s anything I missed or if you have a different perspective, feel free to share your thoughts here. 💬